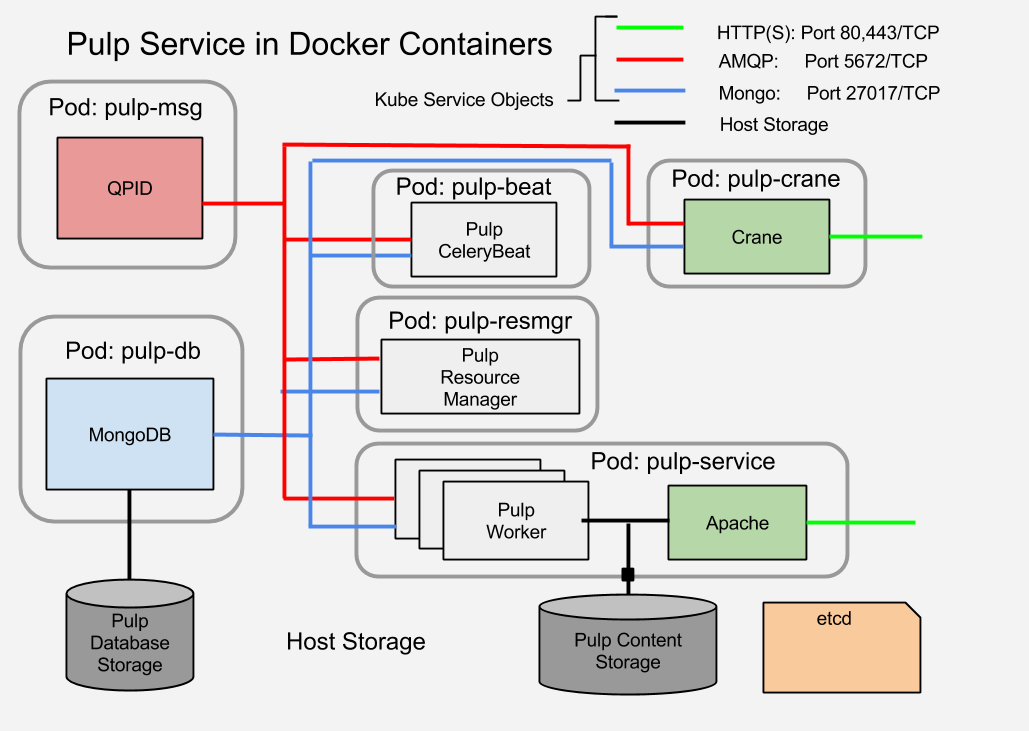

A complete Pulp service

The Pulp service is composed of a number of sub-services:

- A MongoDB database

- A QPID AMQP message broker

- A number of Celery processes

- 1 Celery Beat process

- 1 Pulp Resource Manager (Celery worker) process

- >1 Pulp worker (Celery worker) process

- >1 Apache HTTPD - serves mirrored content to clients

- >1 Crane service - Docker plugin for Pulp

This diagram illustrates the components and connectivity of a Pulp service as it will be composed in Kubernetes using Docker containers.

Pulp Service Component Structure

The simplest images will be those for the QPID and MongoDB services. I'm going to show how to create the MongoDB image first.

There are several things I will not be addressing in this simple example:

- HA and replication

  In production the MongoDB would be replicated.

  In production the QPID AMQP service would have a mesh of brokers.

- Communications Security

  In production the links between components to the MongoDB and the QPID message broker would be encrypted and authenticated.

  Key management is actually a real problem with Docker at the moment and will require its own set of discussions.

A Docker container for MongoDB

This post essentially duplicates the instructions for creating a MongoDB image which are provided on the Docker documentation site. I'm going to walk through them here for several reasons. First is for completeness and for practice on the basics of creating a simple image. Second, the Docker example uses Ubuntu for the base image. I am going to use Fedora. In later posts I'm going to be doing some work with Yum repos and RPM installation. Finally I'm going to make some notes which are relevant to the suitability of a container for use in a Kubernetes cluster.

Work Environment

I'm working on Fedora 20 with the docker-io package installed and the docker service enabled and running. I've also added my username to the docker group in /etc/group so I don't need to use sudo to issue docker commands. If your work environment differs you'll probably have to adapt some.

Defining the Container: Dockerfile

New Docker images are defined in a Dockerfile. Capitalization matters in the file name. The Dockerfile must reside in a directory of its own. Any auxiliary files that the Dockerfile may reference will reside in the same directory. The syntax for a Dockerfile is documented on the Docker web site.

This is the Dockerfile for the MongoDB image in Fedora 20:
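The listing itself is missing from this copy of the post. What follows is a reconstruction, not the original file. The directives and their arguments are taken from the build transcript later in the post, and the blank lines are arranged so that the line numbers used in the walkthrough line up, counting from the FROM line rather than from the `cat` line. The two comment lines are placeholders for the JSON comment, whose content is not recoverable here, and the maintainer's email address is omitted for the same reason. It is written as a shell heredoc so it can be pasted as is; images/mongodb is the image directory used in the build step.

```shell
# Reconstruction of the Dockerfile from the build transcript (not the original file).
mkdir -p images/mongodb
cat > images/mongodb/Dockerfile <<'EOF'
FROM fedora:20
MAINTAINER Mark Lamourine

# Placeholder: the original has a two-line JSON usage comment here;
# its content is not recoverable from this copy of the post.

RUN yum install -y mongodb-server && \
    yum clean all

RUN mkdir -p /var/lib/mongodb && \
    touch /var/lib/mongodb/.keep && \
    chown -R mongodb:mongodb /var/lib/mongodb

ADD mongodb.conf /etc/mongodb.conf

VOLUME [ "/var/lib/mongodb" ]

EXPOSE 27017

USER mongodb

WORKDIR /var/lib/mongodb

CMD [ "/usr/bin/mongod", "--quiet", "--config", "/etc/mongodb.conf", "run"]
EOF
```

The quoted `'EOF'` delimiter keeps the shell from touching the backslashes and quotes, so the file on disk is exactly the 24 lines between the markers.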

That's really all it takes to define a new container image. Only the FROM line is strictly required in every Dockerfile; the MAINTAINER line is customary but optional. The rest form the description of the new container.

Dockerfile: FROM

Line 1 indicates the base image to begin with. It refers to an existing image on the official public Docker registry. This image is offered and maintained by the Fedora team. I specify the Fedora 20 version. If I had left the version tag off, Docker would use the image tagged latest.

Dockerfile: MAINTAINER

Line 2 gives contact information for the maintainer of the image definition.

Diversion:

Lines 4 and 5 are an unofficial comment. It's a fragment of JSON which contains some information about how the image is meant to be used.

Dockerfile: RUN

Line 7 is where the real fun begins. The RUN directive indicates that what follows is a command to be executed in the context of the base image. It will make changes or additions which will be captured and used to create a new layer. In fact, every directive from here on out creates a new layer. When the image is run, the layers are composed to form the final contents of the container before executing any commands within the container.

The shell command which is the value of the RUN directive must be treated by the shell as a single line. If the command is too long to fit in an 80 character line then shell escapes (\<cr>) and conjunctions (';' or '&&' or '||') are used to indicate line continuation just as if you were writing into a shell on the CLI.

This particular line installs the mongodb-server package and then cleans up the YUM cache. The cleanup is required because any differences in the file tree from the beginning state will be included in the next image layer. Cleaning up after YUM keeps the cached RPMs and metadata from bloating the layer and the image.

Line 10 is another RUN statement. This one prepares the directory where the MongoDB storage will reside. Ordinarily the directory would be created on a host when the MongoDB package is installed, with a little more setup done during the startup process for the daemon. The steps are here explicitly because I'm going to punch a hole in the container so that I can mount the data storage area from the host. The mount process can overwrite some of the directory settings. Setting them explicitly here ensures that the directory is present and the permissions are correct for mounting the external storage.

Dockerfile: ADD

Line 14 adds a file to the container. In this case it's a slightly tweaked mongodb.conf file. It adds a couple of switches which the Ubuntu example from the Docker documentation applies using CLI arguments to the docker run invocation. The ADD directive takes the input file from the directory containing the Dockerfile and will overwrite the destination file inside the container.
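The tweaked mongodb.conf is not shown in the post. As a rough sketch, the "options:" line in the container's startup log further down lists dbpath, nohttpinterface, noprealloc and smallfiles as coming from the config file, which suggests something like the following in the `key = value` format used by MongoDB 2.4 config files. The exact contents, ordering and comments are an assumption; the original file may differ.

```shell
# Sketch of images/mongodb/mongodb.conf, inferred from the "options:" line
# in the docker logs output. This is NOT the author's actual file.
mkdir -p images/mongodb
cat > images/mongodb/mongodb.conf <<'EOF'
# Where mongod keeps its data; matches the VOLUME in the Dockerfile
dbpath = /var/lib/mongodb

# Keep the on-disk footprint small for a demo container
smallfiles = true
noprealloc = true

# Disable the built-in HTTP status interface
nohttpinterface = true
EOF
```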

Lines 16-22 don't add new content but rather describe the run-time environment for the contents of the container.

Dockerfile: VOLUME

Line 16 officially declares that the directory /var/lib/mongodb will be used as a mountpoint for external storage.
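To make the mountpoint concrete: at run time a host directory can be bound onto the declared volume with the `--volume` option of docker run. The sketch below wraps that in a function. The function name, the container name and the host path are placeholders for illustration and do not come from this post. It also assumes the host directory is writable by the container's mongodb user; on Fedora, SELinux labeling may need attention as well.

```shell
# Hypothetical wrapper: start the image with a host directory mounted
# over the volume declared in the Dockerfile. Names and paths are placeholders.
run_mongodb_with_volume() {
    host_dir="$1"          # e.g. /srv/mongodb-data (assumed path)
    container_name="$2"    # e.g. mongodb2 (assumed name)

    docker run --name "$container_name" --detach --publish-all \
        --volume "$host_dir:/var/lib/mongodb" \
        markllama/mongodb
}
```

It would be called as `run_mongodb_with_volume /srv/mongodb-data mongodb2`. The other options are the same ones used for the plain docker run later in this post.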

Dockerfile: EXPOSE

Line 18 declares that TCP port 27017 will be exposed. This will allow connections from outside the container to access the mongodb inside.

Dockerfile: USER

Line 20 declares that the first command executed will be run as the mongodb user.

Dockerfile: WORKDIR

Line 22 declares that the command will run in /var/lib/mongodb, the home directory for the mongodb user.

Dockerfile: CMD

The last line of the Dockerfile traditionally describes the default command to be executed when the container starts.

Line 24 uses the CMD directive. The arguments are an array of strings which make up the program to be invoked by default on container start.

Building the Docker Image

With the Dockerfile and the mongodb.conf template in the image directory (in my case, the directory is images/mongodb) I'm ready to build the image. The transcript for the build process is pretty long. This one I include in its entirety so you can see all of the activity that results from the Dockerfile directives.

    docker build -t markllama/mongodb images/mongodb
    Sending build context to Docker daemon 4.096 kB
    Sending build context to Docker daemon
    Step 0 : FROM fedora:20
    Pulling repository fedora
    88b42ffd1f7c: Download complete
    511136ea3c5a: Download complete
    c69cab00d6ef: Download complete
     ---> 88b42ffd1f7c
    Step 1 : MAINTAINER Mark Lamourine
     ---> Running in 38db2e5fffbb
     ---> fc120ab67c77
    Removing intermediate container 38db2e5fffbb
    Step 2 : RUN yum install -y mongodb-server && yum clean all
     ---> Running in 42e55f18d490
    Resolving Dependencies
    --> Running transaction check
    ---> Package mongodb-server.x86_64 0:2.4.6-1.fc20 will be installed
    --> Processing Dependency: v8 for package: mongodb-server-2.4.6-1.fc20.x86_64
    ...
    Installed:
      mongodb-server.x86_64 0:2.4.6-1.fc20
    ...
    Complete!
    Cleaning repos: fedora updates
    Cleaning up everything
     ---> 8924655bac6e
    Removing intermediate container 42e55f18d490
    Step 3 : RUN mkdir -p /var/lib/mongodb && touch /var/lib/mongodb/.keep && chown -R mongodb:mongodb /var/lib/mongodb
     ---> Running in 88f5f059c3ff
     ---> f8e4eaed6105
    Removing intermediate container 88f5f059c3ff
    Step 4 : ADD mongodb.conf /etc/mongodb.conf
     ---> eb358bbbaf75
    Removing intermediate container 090e1e36f7f6
    Step 5 : VOLUME [ "/var/lib/mongodb" ]
     ---> Running in deb3367ff8cd
     ---> f91654280383
    Removing intermediate container deb3367ff8cd
    Step 6 : EXPOSE 27017
     ---> Running in 0c1d97e7aa12
     ---> 46157892e3fe
    Removing intermediate container 0c1d97e7aa12
    Step 7 : USER mongodb
     ---> Running in 70575d2a7504
     ---> 54dca617b94c
    Removing intermediate container 70575d2a7504
    Step 8 : WORKDIR /var/lib/mongodb
     ---> Running in 91759055c498
     ---> 0214a3fbcafc
    Removing intermediate container 91759055c498
    Step 9 : CMD [ "/usr/bin/mongod", "--quiet", "--config", "/etc/mongodb.conf", "run"]
     ---> Running in 6b48f1489a3e
     ---> 13d97f81beb4
    Removing intermediate container 6b48f1489a3e
    Successfully built 13d97f81beb4

You can see how each directive in the Dockerfile corresponds to a build step, and you can see the activity that each directive generates.

When Docker processes a Dockerfile, what it really does first is to start a container from the base image and, instead of the image's default command, execute in that container the command derived from the first Dockerfile directive. Each directive causes some change to the contents of the container.

A Docker container is actually composed of a set of file trees that are layered using a union filesystem: read-only layers underneath with a read/write layer on the top. Any changes go into the top layer. When you unmount the underlying layers, what remains in the read/write layer are the changes caused by the first directive. When building a new image the changes for each directive are archived into a tarball and checksummed to produce the new layer and the layer's ID.

This process is repeated for each directive, accumulating new layers until all of the directives have been processed. The intermediate containers are deleted, the new layer files are saved and tagged. The end result is a new image (a set of new layers).

Running the Mongo Container

The simplest test for the new container is to try running it and observe what happens.

    docker run --name mongodb1 --detach --publish-all markllama/mongodb
    a90b275d00d451fde4edd9bc99798a4487815e38c8efbe51bfde505c17d920ab

This invocation indicates that docker should run the image named markllama/mongodb. When it does, it should detach (run as a daemon) and make all of the network ports exposed by the container available to the host (that's the --publish-all). It will name the newly created container mongodb1 so that you can distinguish it from other instances of the same image. It also allows you to refer to the container by name rather than needing the ID hash all the time. If you don't provide a name, docker will assign one from some randomly selected words.

The response is a hash which is the full ID of the new running container. Most times you'll be able to get away with a shorter version of the hash (as presented by docker ps; see below) or with the container name.

Examining the Running Container(s)

So the container is running. There's a MongoDB waiting for a connection. Or is there? How can I tell and how can I figure out how to connect to it?

Docker offers a number of commands to view various aspects of the running containers.

To list the running containers use docker ps.

Listing the Running Containers.

    docker ps
    CONTAINER ID  IMAGE                     COMMAND               CREATED     STATUS    PORTS                     NAMES
    a90b275d00d4  markllama/mongodb:latest  /usr/bin/mongod --qu  5 mins ago  Up 5 min  0.0.0.0:49155->27017/tcp  mongodb1

This line will likely wrap unless you have a wide screen.

In this case there is only one running container. Each line is a summary report on a single container. The important elements for now are the name, the ID and the ports summary. This last tells me that I should be able to connect from the host to the container's MongoDB using localhost:49155, which is forwarded to the container's exposed port 27017.

What did it do on startup?

A running container has one special process which is sort of like the init process on a host. That's the process indicated by the CMD or ENTRYPOINT directive in the Dockerfile.

When the container starts, the STDOUT of the initial process is connected to the docker service. I can retrieve the output by requesting the logs.

For Docker commands which apply to single containers the final argument is either the ID or name of a container. Since I named the mongodb container I can use the name to access it.

docker logs mongodb1

Thu Aug 28 20:38:08.496 [initandlisten] MongoDB starting : pid=1 port=27017 dbpath=/var/lib/mongodb 64-bit host=a90b275d00d4

Thu Aug 28 20:38:08.498 [initandlisten] db version v2.4.6

Thu Aug 28 20:38:08.498 [initandlisten] git version: nogitversion

Thu Aug 28 20:38:08.498 [initandlisten] build info: Linux buildvm-12.phx2.fedoraproject.org 3.10.9-200.fc19.x86_64 #1 SMP Wed Aug 21 19:27:58 UTC 2013 x86_64 BOOST_LIB_VERSION=1_54

Thu Aug 28 20:38:08.498 [initandlisten] allocator: tcmalloc

Thu Aug 28 20:38:08.498 [initandlisten] options: { command: [ "run" ], config: "/etc/mongodb.conf", dbpath: "/var/lib/mongodb", nohttpinterface: "true", noprealloc: "true", quiet: true, smallfiles: "true" }

Thu Aug 28 20:38:08.532 [initandlisten] journal dir=/var/lib/mongodb/journal

Thu Aug 28 20:38:08.532 [initandlisten] recover : no journal files present, no recovery needed

Thu Aug 28 20:38:10.325 [initandlisten] preallocateIsFaster=true 26.96

Thu Aug 28 20:38:12.149 [initandlisten] preallocateIsFaster=true 27.5

Thu Aug 28 20:38:14.977 [initandlisten] preallocateIsFaster=true 27.58

Thu Aug 28 20:38:14.977 [initandlisten] preallocateIsFaster check took 6.444 secs

Thu Aug 28 20:38:14.977 [initandlisten] preallocating a journal file /var/lib/mongodb/journal/prealloc.0

Thu Aug 28 20:38:16.165 [initandlisten] preallocating a journal file /var/lib/mongodb/journal/prealloc.1

Thu Aug 28 20:38:17.306 [initandlisten] preallocating a journal file /var/lib/mongodb/journal/prealloc.2

Thu Aug 28 20:38:18.603 [FileAllocator] allocating new datafile /var/lib/mongodb/local.ns, filling with zeroes...

Thu Aug 28 20:38:18.603 [FileAllocator] creating directory /var/lib/mongodb/_tmp

Thu Aug 28 20:38:18.629 [FileAllocator] done allocating datafile /var/lib/mongodb/local.ns, size: 16MB, took 0.008 secs

Thu Aug 28 20:38:18.629 [FileAllocator] allocating new datafile /var/lib/mongodb/local.0, filling with zeroes...

Thu Aug 28 20:38:18.637 [FileAllocator] done allocating datafile /var/lib/mongodb/local.0, size: 16MB, took 0.007 secs

Thu Aug 28 20:38:18.640 [initandlisten] waiting for connections on port 27017

This is just what I'd expect for a running mongod.

Just the Port Information please?

If I know the name of the container or its ID I can request the port information explicitly. This is useful when the output must be parsed, perhaps by a program that will create another container needing to connect to the database.

    docker port mongodb1 27017
    0.0.0.0:49155
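For the parsing case, plain shell parameter expansion is enough to split the host address from the port. This is only a sketch: the function name `split_host_port` is made up for illustration, and it assumes the single-line `address:port` output shown above.

```shell
# Hypothetical helper: split "address:port" as printed by `docker port`.
split_host_port() {
    mapping="$1"
    host="${mapping%:*}"    # everything before the last colon
    port="${mapping##*:}"   # everything after the last colon
    echo "$host $port"
}

# With the mapping shown above this prints: 0.0.0.0 49155
split_host_port "0.0.0.0:49155"

# In a script the argument would come from the live command, for example:
#   split_host_port "$(docker port mongodb1 27017)"
```

A program that launches a dependent container could read the two fields with `read host port` and pass them on as environment variables or arguments.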

But is it working?

Docker thinks there's something running. I have enough information now to try connecting to the database itself from the host.

The ports information indicates that the container port 27017 is forwarded to port 49155 on all of the host's interfaces. If the host firewall allows connections in on that port the database could be used (or attacked) from outside.

    echo "show dbs" | mongo localhost:49155
    MongoDB shell version: 2.4.6
    connecting to: localhost:49155/test
    local 0.03125GB
    bye
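The same check can be scripted so that it yields an exit status instead of output to read. This is a minimal sketch: the function name `check_mongodb` is hypothetical, and it treats the presence of the local database in the listing as the sign of life, which matches the output above but is an assumption about what a fresh instance reports.

```shell
# Hypothetical helper: succeed if the mongo shell can list databases
# at the given address and the listing includes "local".
check_mongodb() {
    address="$1"    # e.g. localhost:49155
    echo "show dbs" | mongo "$address" | grep -q '^local'
}
```

Used as `check_mongodb localhost:49155 && echo "mongodb is answering"`, it gives a later script a simple way to wait for the database before starting anything that depends on it.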

What next?

At this point I have verified that I have a running MongoDB accessible from the host (or outside if I allow).

There's lots more that you can do and query about the containers using the docker CLI command, but there's no need to detail it all here. You can learn more from the Docker documentation web site.

Before I start on the Pulp service proper I also need a QPID service container. This is very similar to the MongoDB container so I won't go into detail.

Since the point of the exercise is to run Pulp in Docker with Kubernetes, the next step will be to run the MongoDB and QPID containers using Kubernetes.
